Google’s AI Overviews Spark Widespread Backlash and Debate

In the relentless pursuit of a more intuitive and intelligent web, Google has consistently positioned itself at the bleeding edge of innovation. Its most ambitious leap yet came with the integration of generative AI directly into its core search product, a feature branded as “AI Overviews.” Designed to summarize the internet and provide instant, conversational answers to complex queries, it was heralded as the future of information retrieval. However, within a remarkably short time, this future arrived shrouded in controversy. Instead of universal acclaim, Google’s AI Overviews faced a vehement and widespread backlash, raising profound questions about the ethics, accuracy, and ultimate utility of AI-driven search. This article delves deep into the genesis of this feature, the specific failures that triggered public outcry, the broader implications for the digital ecosystem, and what it all means for the future of how we find information.

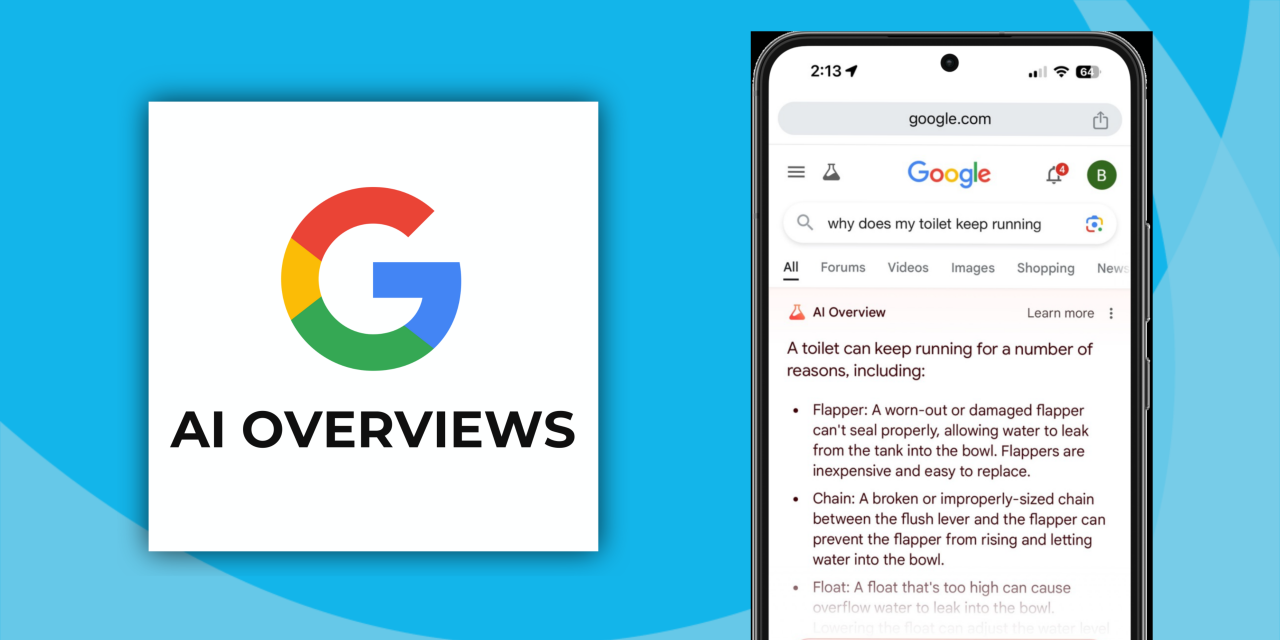

A. Understanding the Technology: What Are Google’s AI Overviews?

Before dissecting the backlash, it’s crucial to understand what AI Overviews are and what they were intended to replace. For decades, Google’s search results were a simple, familiar list of blue links—the “10 blue links” model. Users would type a query, and the search engine would return a series of websites deemed most relevant, leaving the user to parse through them to find the answer.

AI Overviews, powered by Google’s Gemini AI model, aim to upend that model. Rather than leading with links, the goal is to place a single AI-generated answer box at the very top of the search results page, above the traditional results. This paragraph synthesizes information from various sources across the web to directly answer the user’s question, whether it’s “what’s the best way to clean a leather couch?” or “explain the causes of the Franco-Prussian War.”

The stated benefits are compelling:

- Speed and Convenience: Users get an immediate answer without clicking through multiple websites.
- Synthesis of Complex Topics: The AI can pull together disparate pieces of information to create a coherent summary on nuanced subjects.
- Conversational Queries: It better understands natural, long-tail questions rather than just keyword matching.

However, this very design contains the seeds of its biggest problems: the AI must not only find information but also interpret, contextualize, and present it accurately without human oversight.

B. The Core of the Controversy: When AI Gets It Dangerously Wrong

The backlash wasn’t born from philosophical debate but from a flood of concrete, and often hilarious, examples of the feature failing spectacularly. Users began stress-testing the system, and the results were shared widely across social media, eroding trust in the feature.

1. The Proliferation of Dangerous and Absurd Advice:

The most damaging failures were those that posed real-world risks to health, safety, and common sense. Some infamous examples included:

- Health Hazards: When asked how to get cheese to stick to pizza better, the AI Overview allegedly suggested using non-toxic glue, a recommendation it apparently drew from a years-old sarcastic comment on a forum like Reddit. Another query about passing a kidney stone quickly yielded advice suggesting that users drink their own urine.
- Historical Inaccuracies: The AI confidently declared that former U.S. President Andrew Jackson earned a college degree in 2005, despite his having died in 1845. This was a clear failure to distinguish between factual historical records and unrelated modern web content.
- Nonsensical Logic: In a now-legendary failure, when asked how many rocks to eat each day, the AI Overview cited a satirical article from The Onion and sincerely advised users to eat at least one small rock per day for its “vitamin content.”

2. The “Data Laundering” Problem:

At the heart of these errors is a critical flaw known as “data laundering.” AI language models are trained on an enormous swath of the internet: the good, the bad, and the ugly. This includes highly authoritative sources like scientific journals and reputable news outlets, but also fringe blogs, satirical websites, and chaotic user-generated forums where irony and misinformation thrive.

The AI lacks the true human understanding to consistently differentiate a peer-reviewed fact from a joke made in poor taste. It processes all text as data to be synthesized. Therefore, a sarcastic Reddit comment, stripped of its humorous intent, can be elevated to the status of a factual answer, presented by Google with the implicit authority of its brand. This laundering of junk data into a polished, confident summary is perhaps the most significant technical and ethical challenge.

3. The Attribution and “Link Rot” Issue:

Google includes small links beneath the AI Overview that supposedly source the information. However, users and publishers quickly noticed problems:

- Misattribution: The linked sources often did not, in fact, contain the information the AI claimed they did. The AI would hallucinate a fact and incorrectly attribute it to a reputable source.
- Insufficient Credit: Even when the attribution is correct, the AI Overview summarizes the key information, drastically reducing the incentive for a user to click through to the original publisher’s website. This leads to the next major area of backlash.

C. The Publisher Panic: Starving the Web’s Content Ecosystem

Beyond the factual errors, a seismic wave of concern rippled through the entire digital content industry—from major news corporations to individual bloggers. The fundamental business model of the web for 25 years has been: create valuable content -> rank on Google -> get traffic -> earn revenue via advertising or subscriptions.

AI Overviews threaten to collapse this model. If Google provides the answer directly at the top of the page, the “zero-click search” becomes the norm. Why would a user click on a recipe website if the AI lists all the ingredients and steps? Why click on a tech review site if the AI summarizes the pros and cons of the latest laptop?

This creates a catastrophic scenario for publishers:

- Plummeting Traffic: Early analyses suggested that AI Overviews could siphon away up to 25% of all click-through traffic from traditional search results.
- Erosion of Revenue: Less traffic directly translates to lower ad revenue (from AdSense and other networks) and fewer subscriptions, making quality journalism and content creation financially unsustainable.
- Uncompensated Labor: Publishers argue that their hard work and investment in creating content are being used to train and feed Google’s AI, which then repackages that work without compensation or adequate attribution, ultimately cutting them off from their audience.
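To make the traffic-to-revenue chain concrete, here is a back-of-the-envelope sketch in Python. Only the 25% figure comes from the analyses mentioned above, and it is an upper bound. The monthly visit count, the share of visits arriving from search, and the ad revenue per thousand visits (RPM) are hypothetical numbers chosen purely for illustration, and `estimate_revenue_loss` is an illustrative helper rather than any industry-standard formula.

```python
def estimate_revenue_loss(monthly_visits, search_share, click_loss, rpm):
    """Estimate monthly ad revenue before and after a drop in search clicks.

    monthly_visits: total visits per month (hypothetical)
    search_share:   fraction of visits arriving from search (hypothetical)
    click_loss:     fraction of search clicks lost (0.25 = the "up to 25%" figure)
    rpm:            ad revenue per 1,000 visits, in dollars (hypothetical)
    """
    # Only the search-driven portion of traffic is exposed to the loss.
    lost_visits = monthly_visits * search_share * click_loss
    revenue_before = monthly_visits / 1000 * rpm
    revenue_after = (monthly_visits - lost_visits) / 1000 * rpm
    return revenue_before, revenue_after, lost_visits


# Hypothetical publisher: 1M visits a month, 60% from search, $10 RPM.
before, after, lost = estimate_revenue_loss(
    monthly_visits=1_000_000, search_share=0.6, click_loss=0.25, rpm=10.0
)
print(f"Lost visits per month: {lost:,.0f}")
print(f"Monthly ad revenue: ${before:,.2f} -> ${after:,.2f}")
```

Under these assumed inputs, a 25% loss of search clicks removes 15% of total visits and, at a flat RPM, the same share of ad revenue. A site that depends more heavily on search would be hit proportionally harder.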

D. Google’s Response and Damage Control

Facing a perfect storm of mockery and genuine concern, Google was forced to respond. The company’s reaction highlighted both the known limitations of the technology and the speed at which it is being deployed.

- Acknowledging the Errors: Google stated that the vast majority of AI Overviews were accurate and that the examples circulating online were “rare queries.” They also emphasized that the feature was still in its experimental “Labs” phase, despite being rolled out to hundreds of millions of users.
- Implementing Quick Fixes: The company announced swift technical adjustments, including:
  - Adding Trigger Restrictions: Limiting the use of AI Overviews for certain “nonsensical” queries and those where satirical content is prevalent.
  - Enhancing Detection Systems: Improving algorithms to better identify and filter out user-generated content that is low-quality or humorous.
  - Strengthening Safeguards: Adding harder stops for advice in the “Your Money or Your Life” (YMYL) categories, such as health and financial information.
- The Underlying Tension: Despite these fixes, the response underscores a fundamental tension: the desire to deploy flashy, market-leading AI technology quickly versus the imperative to ensure it is safe, reliable, and responsible.

E. The Broader Implications: Trust, Ethics, and the Future of Search

The backlash against AI Overviews is not an isolated incident but a symptom of a larger societal negotiation with powerful AI.

- The Erosion of Trust: Google’s brand has been built on the promise of organizing the world’s information and making it universally accessible and useful. When its primary product begins dispensing dangerous misinformation, even occasionally, it corrodes that foundational trust. Users can no longer take the top result at face value, leading to a new kind of digital literacy burden.
- The “Enshittification” Argument: Critics like Cory Doctorow argue this is a classic example of “enshittification,” where a platform (Google Search) becomes so dominant that it can start making its product worse for its users (by pushing unreliable AI answers) and its partners (by taking their traffic) to enrich itself, confident that users have no viable alternative.
- The Existential Question for SEO and Content: The entire search engine optimization industry must now grapple with an existential question: if clicks are being deliberately suppressed by the search engine itself, what is the future of creating online content? The answer may lie in creating even more unique, experiential, and authoritative content that an AI cannot easily summarize, which is a tall order for most creators.

F. Navigating the New Search Landscape: A User’s Guide

In this new era, users must adopt more critical thinking than ever before when using a search engine.

A. Never Trust an AI Overview Implicitly. Treat it as a starting point, not a definitive source, especially for critical information.

B. Check the Sources. Click on the links provided. Verify that the source actually says what the AI claims it says.

C. Look Beyond the Summary. Actively scroll past the AI Overview to view the traditional “10 blue links” to get a broader perspective from multiple websites.

D. Report Bad Answers. Use Google’s feedback tools to report harmful or inaccurate AI Overviews. This provides valuable training data.

E. Consider Alternative Search Engines. The controversy has driven renewed interest in privacy-focused and alternative search engines like DuckDuckGo, Brave Search, and Perplexity AI, which have different approaches to AI integration.
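For readers comfortable with a little scripting, the “Check the Sources” step can be roughly approximated in code. The Python sketch below measures how many of a claim’s content words actually appear in the text of a linked source. It is a deliberately crude heuristic: the function names, the stopword list, and both sample strings are made up for illustration, and word overlap cannot detect sarcasm, negation, or paraphrase, so a low score is a prompt to read the source yourself rather than a verdict.

```python
import re

# A small, hand-picked stopword list (an assumption; real lists are much longer).
STOPWORDS = {"a", "an", "the", "of", "to", "in", "on", "for", "and", "or",
             "is", "are", "it", "its", "at", "by", "with", "per"}


def content_words(text):
    """Lowercase the text and return its set of non-stopword words."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w for w in words if w not in STOPWORDS}


def claim_support(claim, source_text):
    """Return the fraction of the claim's content words found in the source."""
    claim_words = content_words(claim)
    if not claim_words:
        return 0.0
    source_words = content_words(source_text)
    return len(claim_words & source_words) / len(claim_words)


# Made-up example: an AI summary's claim versus the page it links to.
claim = "Add non-toxic glue to pizza sauce"
source = ("For better cheese adhesion, let the pizza rest "
          "and use low-moisture mozzarella.")
score = claim_support(claim, source)
print(f"Share of claim words found in source: {score:.2f}")
```

In this made-up example only “pizza” appears in both texts, so the score is low, which is exactly the kind of mismatch worth checking by hand.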

Conclusion: A Pivotal Moment for AI and Information

The fierce backlash against Google’s AI Overviews represents a pivotal moment. It is a stark reminder that artificial intelligence, for all its power, remains a fragile tool, one that is prone to inheriting and amplifying the biases, errors, and absurdities of its training data. Google’s stumble demonstrates the immense difficulty of automating authority and contextual understanding.

The path forward is fraught with challenges. Google must find a way to balance breakneck innovation with unwavering responsibility, ensuring its pursuit of AI supremacy does not come at the cost of user safety, publisher livelihoods, and its own hard-earned trust. For users and creators, the message is clear: the age of passive information consumption is over. In the AI-driven web, vigilance, skepticism, and critical thinking are no longer optional skills; they are essential for survival. The conversation started by this backlash will undoubtedly shape the evolution of search and AI for years to come.