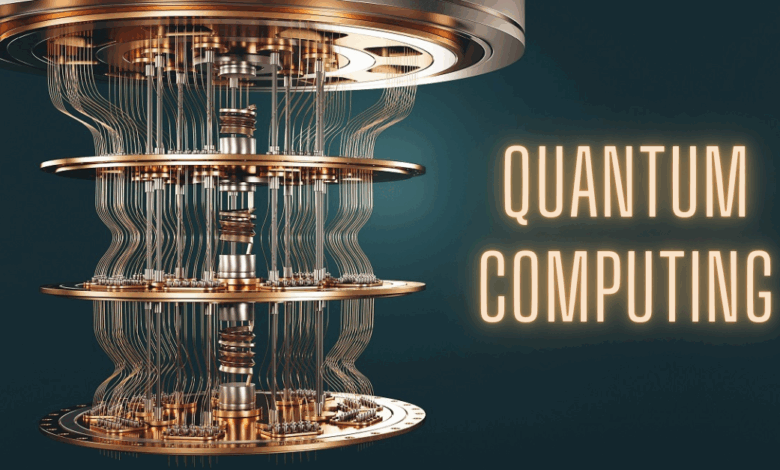

Quantum Computing Achieves Unprecedented Milestone in Error Correction

The long-anticipated revolution in computing has just crossed a critical threshold. In a stunning development that has sent ripples through the scientific community, researchers have announced a monumental breakthrough in quantum computing, successfully demonstrating that it is possible to suppress errors rather than just correct them after they occur. This pivotal achievement marks a fundamental shift from theoretical possibility to tangible, scalable reality, bringing us closer to the era where quantum computers solve problems deemed impossible for classical supercomputers.

For decades, the promise of quantum computing has been shadowed by a formidable obstacle: fragility. Quantum bits, or qubits, the fundamental units of quantum information, are extremely susceptible to interference from their environment, a process known as decoherence. Heat, vibrations, and even stray electromagnetic fields can cause qubits to lose their quantum state, leading to computational errors. Until now, managing these errors required an immense number of additional qubits for correction. Because every added qubit is itself a new source of errors, scaling up often made things worse rather than better. This new research demonstrates a method to proactively reduce errors at the source, a feat once thought to be years away.

A. Demystifying the Quantum Advantage: Why It Matters

To understand the gravity of this breakthrough, one must first grasp what sets quantum computing apart. Unlike classical computers, which use bits (0s and 1s), quantum computers use qubits that leverage the quantum mechanical principles of superposition and entanglement.

- Superposition: A qubit can exist not just as a 0 or a 1, but as a weighted combination of both states at once. It is described by two complex amplitudes whose squared magnitudes give the probabilities of measuring 0 or 1. Imagine a spinning coin: while it is in the air, it is neither purely heads nor purely tails. Superposition is often summarized as letting a quantum computer perform vast numbers of calculations in parallel. More precisely, quantum algorithms use interference between amplitudes to amplify correct answers and cancel wrong ones.

- Entanglement: This is a uniquely quantum connection in which qubits become intrinsically linked, so that measurement outcomes on one are correlated with outcomes on the other, no matter the distance between them. These correlations cannot be used to send signals. They are, however, stronger than any classical system can reproduce, and they enable processing capabilities that are impossible for classical machines.
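Both ideas can be made concrete with a small state-vector sketch. This is a minimal illustration in plain Python. It assumes the standard textbook model of qubits as lists of complex amplitudes. The helper names (`apply_h`, `bell_state`, `probabilities`) are hypothetical and are not taken from Qiskit, Cirq, or any other SDK. A Hadamard gate puts one qubit into an equal superposition, and a Hadamard followed by a CNOT entangles two qubits into a Bell state.

```python
import math

# A single qubit is a pair of amplitudes [alpha, beta] for |0> and |1>.
# Measurement probabilities are the squared magnitudes of the amplitudes.
def probabilities(state):
    return [abs(a) ** 2 for a in state]

def apply_h(state):
    """Apply a Hadamard gate to a single-qubit state."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return [s * (alpha + beta), s * (alpha - beta)]

def bell_state():
    """Build (|00> + |11>)/sqrt(2): Hadamard on qubit 0, then CNOT(0 -> 1).

    Two-qubit amplitudes are ordered |00>, |01>, |10>, |11>,
    where the first label is qubit 0.
    """
    q0 = apply_h([1.0, 0.0])              # qubit 0 in equal superposition
    state = [q0[0], 0.0, q0[1], 0.0]      # combined with qubit 1 in |0>
    # CNOT flips qubit 1 when qubit 0 is 1: swap the |10> and |11> amplitudes.
    state[2], state[3] = state[3], state[2]
    return state

print([round(p, 3) for p in probabilities(apply_h([1.0, 0.0]))])  # [0.5, 0.5]
print([round(p, 3) for p in probabilities(bell_state())])         # [0.5, 0.0, 0.0, 0.5]
```

The Bell state assigns zero probability to `01` and `10`. The two measurement results therefore always agree, even though each one on its own is a fair coin flip. That perfect correlation is what entanglement adds. It does not let either side choose the outcome, which is why it cannot carry a signal.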

This unique architecture allows quantum computers to excel at specific, complex tasks such as:

- Discovering new pharmaceuticals by simulating molecular interactions at an atomic level.

- Finding more efficient routes to fertilizer by modeling nitrogen fixation, potentially reducing reliance on the energy-hungry Haber-Bosch process.

- Developing new materials with bespoke properties, like high-temperature superconductors.

- Breaking widely used public-key cryptographic protocols, which in turn is spurring new defenses such as quantum-resistant algorithms and quantum cryptography.

- Optimizing large-scale logistical systems for finance, transportation, and supply chains.

B. The Achilles’ Heel: The Problem of Quantum Errors

The very properties that give quantum computers their power also make them notoriously unstable. A qubit in superposition is a delicate state that collapses into a definite 0 or 1 upon measurement or from the slightest environmental interference. These errors manifest in two primary forms:

- Bit-Flip Errors: Similar to a classical bit error, where a 0 accidentally becomes a 1, or vice versa.

- Phase-Flip Errors: A uniquely quantum error in which the relative phase between the 0 and 1 components of a superposition is flipped (the sign of the 1 component is reversed). This corrupts the information without changing the probabilities of reading 0 or 1 in a standard measurement. It therefore cannot be caught simply by checking bit values.
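The difference between the two error types shows up clearly in a toy simulation. The sketch below assumes the usual convention that a bit flip is the Pauli X operation and a phase flip is the Pauli Z operation. It applies each to a single-qubit state and compares measurement statistics in the standard (Z) basis and in the rotated (X) basis. The function names are illustrative only.

```python
import math

# Single-qubit states as [amplitude of |0>, amplitude of |1>].
def x_error(state):
    """Bit flip (Pauli X): swaps the |0> and |1> amplitudes."""
    a, b = state
    return [b, a]

def z_error(state):
    """Phase flip (Pauli Z): negates the |1> amplitude."""
    a, b = state
    return [a, -b]

def z_basis_probs(state):
    """Probabilities of reading 0 or 1 in the standard (Z) basis."""
    return [round(abs(a) ** 2, 3) for a in state]

def x_basis_probs(state):
    """Probabilities of reading |+> or |-> (apply Hadamard, then measure)."""
    a, b = state
    s = 1 / math.sqrt(2)
    return [round(abs(s * (a + b)) ** 2, 3), round(abs(s * (a - b)) ** 2, 3)]

plus = [1 / math.sqrt(2), 1 / math.sqrt(2)]  # |+>, an equal superposition
flipped = z_error(plus)

print(z_basis_probs(plus), z_basis_probs(flipped))  # [0.5, 0.5] [0.5, 0.5]
print(x_basis_probs(plus), x_basis_probs(flipped))  # [1.0, 0.0] [0.0, 1.0]
print(z_basis_probs(x_error([1.0, 0.0])))           # [0.0, 1.0]
```

A phase flip on the equal superposition leaves the 0/1 statistics untouched at 50/50. It does, however, turn a certain outcome in the X basis into the opposite certain outcome. This is why error-correcting codes must check for both kinds of error.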

Traditional error-correction methods, like the surface code, group many fragile physical qubits into a single, more stable logical qubit. The information is spread across multiple qubits. Repeated parity checks (syndrome measurements) then reveal which qubit has likely failed without reading out, and thereby destroying, the encoded state. The simplest version of the idea is a majority vote among redundant copies. However, this requires a daunting overhead: some estimates suggested needing over 1,000 physical qubits to create a single, reliable logical qubit. This approach corrected errors after they happened but did little to prevent them, consuming immense resources.
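The surface code is too involved for a few lines of code. The redundancy idea behind it, however, appears in the simplest possible scheme: a three-qubit repetition code that protects against bit flips by majority vote. The sketch below assumes each physical qubit flips independently with probability `p`. This is a simplification, since real noise is correlated and includes phase errors. The code compares a Monte Carlo estimate with the exact failure probability, 3p^2 - 2p^3, which is the chance that two or more copies flip.

```python
import random

def majority_vote_round(p, n=3, rng=random):
    """Encode logical 0 as n copies, flip each copy independently with
    probability p, and decode by majority vote. Returns True on failure."""
    flips = sum(1 for _ in range(n) if rng.random() < p)
    return flips > n // 2

def logical_error_rate(p, n=3, trials=100_000, seed=1):
    """Monte Carlo estimate of how often the majority vote decodes wrongly."""
    rng = random.Random(seed)
    failures = sum(majority_vote_round(p, n, rng) for _ in range(trials))
    return failures / trials

def exact_three_qubit(p):
    """Exact failure probability for n = 3: two or three of the copies flip."""
    return 3 * p**2 - 2 * p**3

for p in (0.01, 0.1, 0.4):
    print(p, round(exact_three_qubit(p), 5), logical_error_rate(p))
```

At p = 0.01 the logical error rate drops to about 0.0003. As p approaches 0.5, though, the vote stops helping. Real codes show the same behavior: redundancy pays off only when physical errors are rare enough. For the surface code, that break-even point (the threshold) is commonly quoted at roughly 1% per operation.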

C. The Nature of the Groundbreaking Achievement

The recent breakthrough, published in a landmark paper, turns the traditional approach on its head. Instead of simply building a larger corrective net, the teams (from leading institutions like Google Quantum AI, IBM, and others) have developed techniques to suppress the errors at the source.

The core innovation involves a combination of advanced materials science, precise calibration of the control pulses that drive the qubits (microwave pulses, in the case of superconducting qubits), and novel control-system algorithms. Researchers have found ways to:

- Engineer More Robust Qubits: By using new superconducting materials and qubit designs (e.g., fluxonium qubits alongside traditional transmons), they have intrinsically increased the coherence time, the duration for which a qubit maintains its quantum state.

- Implement Dynamic Decoupling: This technique applies carefully timed sequences of control pulses that repeatedly flip the qubit. Phase errors accumulated from slowly varying environmental noise then cancel out instead of building up into decoherence.

- Develop Predictive Control: Machine learning algorithms predict when a qubit is likely to drift or fail and make micro-adjustments to its control signals in real time to keep it stable, a form of quantum preventative medicine.
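The simplest form of dynamic decoupling is the spin echo (Hahn echo). A single pi pulse halfway through an idle period reverses the direction in which phase errors accumulate, so a constant frequency offset cancels itself out. The toy model below assumes each run sees a random, slowly varying frequency offset, and it compares coherence with and without the echo. It illustrates the general principle only. Production systems use longer multi-pulse sequences, and nothing here reproduces the specific methods used in the experiments.

```python
import cmath
import random

def coherence(phases):
    """Magnitude of the ensemble-averaged phase factor: 1.0 means fully coherent."""
    return abs(sum(cmath.exp(1j * ph) for ph in phases) / len(phases))

def simulate(t, sigma=1.0, drift=0.0, shots=20_000, seed=7):
    """Toy dephasing model: each shot draws a random frequency offset d1 for the
    first half of the idle time t; in the second half it has drifted to d2.
    Returns (coherence without echo, coherence with a pi pulse at t/2)."""
    rng = random.Random(seed)
    free, echo = [], []
    for _ in range(shots):
        d1 = rng.gauss(0.0, sigma)
        d2 = d1 + rng.gauss(0.0, drift)
        free.append(d1 * t / 2 + d2 * t / 2)  # phase keeps accumulating
        echo.append(d1 * t / 2 - d2 * t / 2)  # pi pulse reverses the accumulation
    return coherence(free), coherence(echo)

for t in (0.5, 2.0, 5.0):
    f, e = simulate(t, drift=0.05)
    print(f"t={t}: free={f:.3f} echo={e:.3f}")
```

Without the echo, coherence decays quickly as the random offsets scatter the phases. With the echo, only the small drift between the two halves survives. Noise that fluctuates faster than the pulse spacing would not be cancelled, which is why real sequences use many closely spaced pulses.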

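The result was not just a marginal improvement but an exponential reduction in the error rate. Experiments showed that, with these suppression techniques in place, the error rate of a logical qubit decreased as more physical qubits were added to it. This happens only once physical error rates fall below a critical threshold. Above the threshold, each added qubit introduces more errors than the code can remove. Below it, every increase in code size cuts the logical error rate by a roughly constant factor. This negative scaling of error has long been considered the holy grail of quantum computing. It is strong evidence that building large-scale, fault-tolerant quantum computers is not a fantasy but a feasible engineering challenge.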
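The scaling in which adding physical qubits lowers the logical error rate is usually explained by a threshold. A widely used rule of thumb for the surface code says that the logical error rate at code distance d scales roughly as A(p/p_th)^((d+1)/2), where p is the physical error rate and p_th is the threshold. The sketch below evaluates this heuristic together with the qubit count of a rotated surface code, which uses d^2 data qubits plus d^2 - 1 measurement qubits. The values of A and p_th are illustrative assumptions, not figures from the experiments described here.

```python
def surface_code_logical_error(p, d, p_th=0.01, A=0.03):
    """Rule-of-thumb logical error rate per round: A * (p / p_th) ** ((d + 1) / 2).

    p_th (threshold) and A (prefactor) are illustrative assumptions; the
    formula is only a rough guide near and below threshold.
    """
    return A * (p / p_th) ** ((d + 1) / 2)

def rotated_surface_code_qubits(d):
    """d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1

for p in (0.005, 0.02):  # one physical error rate below p_th, one above
    print(f"physical error rate {p}:")
    for d in (3, 5, 7):
        eps = surface_code_logical_error(p, d)
        print(f"  d={d}: {rotated_surface_code_qubits(d)} qubits, logical error ~ {eps:.2e}")
```

Below threshold (p = 0.005), each step from d to d + 2 halves the logical error rate in this model, while the qubit count grows only quadratically. Above threshold (p = 0.02), the same growth makes things worse. Experiments track the ratio between successive distances to show that they are operating below threshold.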

D. A Comparative Look: Old vs. New Error Paradigm

The following table illustrates the fundamental shift in strategy that this breakthrough represents:

| Feature | Traditional Error Correction (Post-Correction) | New Error Suppression Paradigm |
|---|---|---|
| Core Philosophy | Correct errors after they have already occurred. | Prevent errors from happening in the first place. |
| Qubit Overhead | Extremely high. Requires hundreds to thousands of physical qubits per logical qubit. | Significantly lower. Fewer physical qubits are needed for stability. |
| Primary Method | Redundancy. Using many qubits to encode information and majority voting to recover it. | Stability. Improving qubit quality, shielding, and real-time control. |
| Efficiency | Low. Most resources are dedicated to correction, not computation. | High. More resources can be dedicated to actual computational tasks. |
| Scalability | Challenging and resource-prohibitive. | Path to practical and economic scalability. |

E. The Implications: A New Dawn for Computational Power

This milestone is far more than an academic curiosity; it is the key that unlocks the next stage of quantum development.

- Accelerated Timeline: The path to fault-tolerant quantum computing has been drastically shortened. What was projected for 2040 or beyond may now be achievable within the next decade.

- Practical Quantum Advantage: “Quantum advantage” (or “supremacy”), showing that a quantum computer can solve a problem beyond a classical computer’s reach, can now be pursued on meaningful, real-world problems rather than esoteric benchmarks.

- Industry-Wide Catalyst: Every industry poised to benefit from quantum computing, from drug discovery and materials science to artificial intelligence and financial modeling, must now accelerate its quantum-readiness strategy. The race for quantum-ready talent and algorithms is about to intensify dramatically.

- The Cybersecurity Imperative: The threat to current public-key encryption (RSA, ECC) becomes more immediate. Organizations must begin the transition to quantum-resistant cryptography with renewed urgency.
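RSA is exposed because of Shor's algorithm, which reduces factoring to finding the order r of a number a modulo n: the smallest r with a^r mod n = 1. The sketch below shows that classical reduction. The brute-force `find_order` is a stand-in for the quantum subroutine, which is the only part a quantum computer speeds up. The function names are hypothetical, and the example is illustrative rather than a usable attack. ECC is threatened in the same way, because Shor's algorithm also solves the discrete-logarithm problem that ECC relies on.

```python
import math

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.

    Stand-in for the quantum order-finding step of Shor's algorithm.
    Assumes gcd(a, n) == 1; otherwise no such r exists.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Classical part of Shor's algorithm: turn the order of a mod n into factors.

    Assumes 1 < a < n. Returns a pair of factors, or None if this base is unlucky.
    """
    g = math.gcd(a, n)
    if g != 1:
        return g, n // g              # a already shares a factor with n
    r = find_order(a, n)
    half = pow(a, r // 2, n)
    if r % 2 == 1 or half == n - 1:
        return None                   # try a different base a
    p = math.gcd(half - 1, n)
    return p, n // p

print(factor_via_order(15, 7))  # (3, 5)
print(factor_via_order(21, 2))  # (7, 3)
```

Brute-force order finding takes time that grows exponentially with the number of digits of n. Even the best known classical factoring methods remain infeasible for 2048-bit RSA keys. A large fault-tolerant quantum computer running Shor's algorithm would find r efficiently, and the remaining gcd steps are cheap.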

F. The Road Ahead: Challenges and Future Directions

While this breakthrough is monumental, it is not the finish line. Significant challenges remain on the path to a fully operational, large-scale quantum computer.

- Qubit Count and Quality: Increasing the number of high-quality, stable qubits is still a primary focus. Current devices have hundreds of qubits; solving world-changing problems will require millions.

- Integration and Control: Developing the classical electronics and software control systems to manage millions of qubits simultaneously is a colossal engineering feat.

- Software and Algorithms: The ecosystem of quantum algorithms, programming frameworks (like Qiskit and Cirq), and applications needs to mature in tandem with the hardware.

- Cooling and Infrastructure: Maintaining superconducting qubits at temperatures near absolute zero requires large, expensive refrigeration systems (dilution refrigerators). Making this technology more accessible is a key hurdle.

The research will now focus on integrating this error suppression methodology with existing error correction codes, creating a multi-layered defense system against noise. This hybrid approach is widely seen as the most promising path forward.

Conclusion: A Paradigm Shift Unfolds

The message from the world’s top quantum labs is clear: a fundamental barrier has been overcome. The demon of quantum errors has not been slain, but it has been decisively caged. This achievement in error suppression transforms quantum computing from a laboratory experiment plagued by instability into an engineering discipline focused on scalable and reliable construction.

The result stuns scientists not because it was unexpected, but because it was achieved with such efficacy and clarity. It validates decades of theoretical work and provides a clear, tangible roadmap for the future. We are witnessing the inflection point where quantum computing transitions from a promise to a palpable reality, poised to reshape our world in ways we are only beginning to imagine. The quantum future is no longer on the horizon; it is now in view, and it is arriving faster than many predicted.